Remote users can access firewall services and features and, with administrative permissions, enable or disable dynamic firewall rules.

CUDALAUNCH BLOCKING WINDOWS

CudaLaunch for Windows and macOS

CudaLaunch offers secure access to resources made available on the CloudGen Firewall.

CUDALAUNCH BLOCKING INSTALL

Install CudaLaunch from your platform's app store or marketplace so that you always run the newest version. CudaLaunch is backward compatible with all firmware versions. For a list of supported devices, see Supported Browsers, Devices and Operating Systems. The CudaLaunch portal's responsive interface is compatible with both desktop and mobile devices.

In addition, CudaLaunch enables administrators to manage dynamic firewall rules and also integrates with the Barracuda VPN Client to connect via client-to-site VPN.

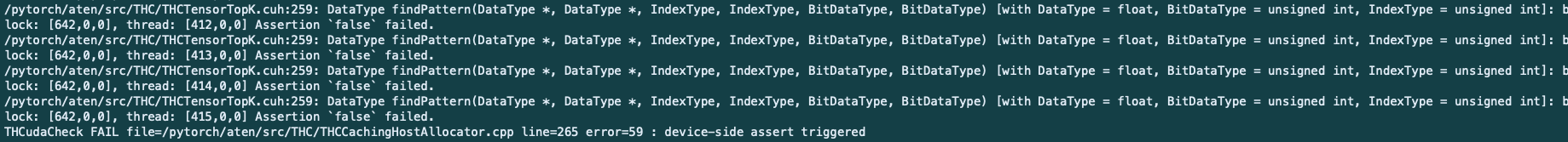

CUDALAUNCH BLOCKING ANDROID

Lernapparat - Copyright © 2016-2020 Dr.

CudaLaunch is a Windows, macOS, iOS, and Android application that provides secure access to your organization's applications and data from remote locations and a variety of devices.

CUDALAUNCH BLOCKING HOW TO

The beautiful thing about PyTorch's immediate execution model is that you can actually debug your programs. Sometimes, however, the asynchronous nature of CUDA execution makes that hard. Here is a little trick to debug your programs.

When you run a PyTorch program using CUDA operations, the program usually doesn't wait until the computation finishes but continues to throw instructions at the GPU until it actually needs a result (e.g. when printing it).

While this behaviour is key to the blazing performance of PyTorch programs, there is a downside: when a CUDA operation fails, your program has long gone on to do other stuff. The usual symptom is that you get a very nondescript error at a more or less random place somewhere after the instruction that triggered the error:

    RuntimeError                              Traceback (most recent call last)
          1 loss = torch.nn.functional.cross_entropy(activations, labels)

    RuntimeError: cuda runtime error (59) : device-side assert triggered at /home/tv/pytorch/pytorch/aten/src/THC/generic/THCStorage.cpp:36

Well, that is hard to understand. I'm sure that printing my results is a legitimate course of action. So a device-side assert means I just noticed something went wrong somewhere.

Here is the faulty program causing this output:

    import torch
    device = torch.device("cuda")  # assumption: this line did not survive in the source
    activations = torch.randn(4, 3, device=device)  # usually we get our activations in a more refined way
    labels = torch.arange(4, device=device)  # assumption: reconstructed from "the labels run to 3"
    loss = torch.nn.functional.cross_entropy(activations, labels)
    print(loss.item())  # assumption: the error surfaces when the result is printed

One option in debugging is to move things to CPU. But often, we use libraries or have complex things where that isn't an option. So what now? If we could only get a good traceback, we should find the problem in no time.

This is how to get a good traceback: you can launch the program with the environment variable CUDA_LAUNCH_BLOCKING set to 1. But as you can see, I like to use Jupyter for a lot of my work, so that is not as easy as one would like. But this can be solved, too: at the very top of your program, before you import anything (and in particular PyTorch), insert

    import os
    os.environ['CUDA_LAUNCH_BLOCKING'] = "1"

With this addition, we get a better traceback:

    ----> 1 loss = torch.nn.functional.cross_entropy(activations, labels)

    /usr/local/lib/python3.6/dist-packages/torch/nn/functional.py in cross_entropy(input, target, weight, size_average, ignore_index, reduce)
    -> 1474     return nll_loss(log_softmax(input, 1), target, weight, size_average, ignore_index, reduce)

    /usr/local/lib/python3.6/dist-packages/torch/nn/functional.py in nll_loss(input, target, weight, size_average, ignore_index, reduce)
    -> 1364         return torch._C._nn.nll_loss(input, target, weight, size_average, ignore_index, reduce)
       1366         return torch._C._nn.nll_loss2d(input, target, weight, size_average, ignore_index, reduce)

    RuntimeError: cuda runtime error (59) : device-side assert triggered at /home/tv/pytorch/pytorch/aten/src/THCUNN/generic/ClassNLLCriterion.cu:116

So apparently, the loss does not like what we pass it. In fact, our activations have shape batch x 3, so we only allow for three categories (0, 1, 2), but the labels run to 3!

The best part is that this also works for nontrivial examples. Now if only we could recover the non-GPU bits of our calculation instead of needing a complete restart.
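For reference, the two ways of setting CUDA_LAUNCH_BLOCKING discussed in this post might be wrapped up roughly as follows. This is only a sketch using the standard library: the helper names `enable_blocking_launches` and `run_with_blocking_launches` are made up for illustration, and checking `sys.modules` for `torch` is just a heuristic for the "before you import PyTorch" advice, not something PyTorch itself provides.

```python
import os
import subprocess
import sys


def enable_blocking_launches() -> bool:
    """Set CUDA_LAUNCH_BLOCKING=1 for the current process (e.g. a notebook).

    Returns True if torch has not been imported yet. This is a heuristic
    for "the variable was set early enough", following the advice to set
    it before importing anything, and in particular PyTorch.
    """
    os.environ["CUDA_LAUNCH_BLOCKING"] = "1"
    return "torch" not in sys.modules


def run_with_blocking_launches(argv):
    """Run a command in a child process with CUDA_LAUNCH_BLOCKING=1."""
    # Copy the parent environment and add the variable for the child only.
    env = dict(os.environ, CUDA_LAUNCH_BLOCKING="1")
    return subprocess.run(argv, env=env, capture_output=True, text=True)


if __name__ == "__main__":
    # Stand-in for a real training script: the child just echoes the variable.
    child = [sys.executable, "-c",
             "import os; print(os.environ['CUDA_LAUNCH_BLOCKING'])"]
    result = run_with_blocking_launches(child)
    print(result.stdout.strip())
```

In a notebook, I would call `enable_blocking_launches()` in the very first cell and restart the kernel if it returns False. For a script, the second helper does the same thing as prefixing the command with the variable on the shell.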
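The bug in the example program is a label outside the range of classes the activations provide, so a cheap check before computing the loss can catch it without involving the GPU at all. The following is a minimal sketch, assuming the labels are available as a plain sequence of integers (for a tensor, something like `labels.tolist()` would do). The function name `find_out_of_range_labels` is hypothetical, and the `ignore_index` parameter only mirrors the argument of the same name visible in the traceback.

```python
def find_out_of_range_labels(labels, num_classes, ignore_index=None):
    """Return (position, label) pairs for labels outside [0, num_classes).

    Labels equal to ignore_index are skipped, since the loss is meant
    to ignore them.
    """
    bad = []
    for position, label in enumerate(labels):
        label = int(label)
        if ignore_index is not None and label == ignore_index:
            continue
        if not 0 <= label < num_classes:
            bad.append((position, label))
    return bad


if __name__ == "__main__":
    # Activations of shape batch x 3 allow the classes 0, 1 and 2 only.
    labels = [0, 1, 2, 3]
    print(find_out_of_range_labels(labels, num_classes=3))  # [(3, 3)]
```

Running this on the labels from the example flags the last entry, which is exactly the label that trips the device-side assert.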
